Voice cloning technology has recently received a fair share of press, not always for the best reasons. Notable artists and personalities have sued individuals and organizations for using such technology to impersonate them.

This guide explores the dark side of using voice cloning software, including the risks and ethical concerns associated with it. It also offers alternatives, such as Speaktor, that you can use for your needs.

How Does AI Voice Cloning Work, and Why Is It Risky?

AI voice cloning uses artificial intelligence (AI) to analyze a person's voice and generate a synthetic replica of it, often for voiceovers.

Because these clones replicate a person's unique vocal characteristics, such as pitch, tone, and cadence, they can make synthetic speech sound as though it came from the person being impersonated.

Think of the controversy surrounding Scarlett Johansson's legal challenge against OpenAI. She alleged that the company mimicked her voice without her consent for its voice-based chatbot.

How TTS With Voice Cloning Creates Deepfake Voices

The threat posed by deepfakes has been growing for several years. Their dangers range from election manipulation, scams, and celebrity pornography to disinformation attacks and social engineering, among many others.

People who encounter manipulated or synthetic content often believe what they see or hear, because voice cloning is designed to replicate an individual's voice accurately. The consequences are often damaging.

Text-to-speech with voice cloning therefore has a dark side in the hands of people who disregard the ethical use of such technology, or who deliberately use a voice cloning feature with malicious intent.

How Realistic AI Voice Clones Can Be Weaponized

As mentioned above, an AI voice clone generator can be weaponized in numerous ways. People use these voice models to target a single individual or even to manipulate large sections of a population.

A report in The Guardian stated that more than 250 British celebrities are among roughly 4,000 people who have been victims of deepfake pornography. Voice cloning technology can play a key role in such content, impersonating an individual's voice in addition to their physical appearance.

These instances can seriously damage an individual's reputation since people often take what they hear or see at face value.

What Are the Biggest Privacy and Security Threats of Voice Cloning?

As you have probably gathered at this point, voice cloning brings with it immense privacy and security threats. It can violate a person's privacy by using their voice without their consent. It can also be damaging at an organizational level: scammers often use such tricks to deceive unsuspecting executives and extract critical financial or personal data.

Social Engineering Attacks Enabled by Cloned Voices

While such scams can happen on a large scale, they also occur in everyday life. You may recall a time when someone you knew called to say they had been arrested and needed money for bail. A scammer could have used recordings of that person's voice to replicate it. Do any such instances come to mind?

Such social engineering scams can affect you or your loved ones. Given the lack of sufficient legal remedies, individuals must always be vigilant. These scams are particularly dangerous because deepfakes often sound like the real deal.

For example, if a scammer knows how your loved one speaks, you may struggle to distinguish between the real person and a deepfake of their voice.

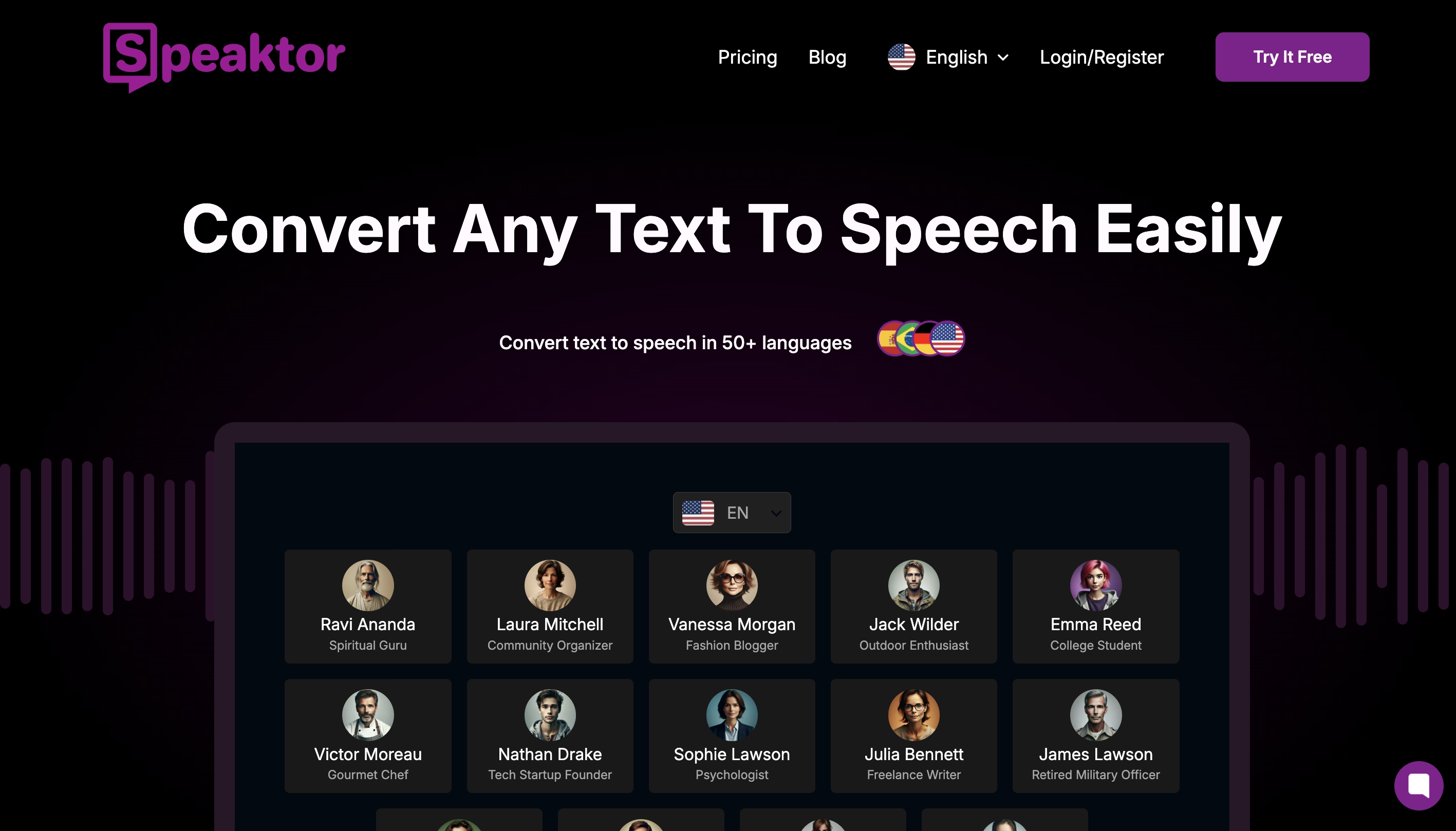

Why Is Speaktor a Better Alternative to Voice Cloning?

The dangers of voice cloning are abundantly clear at this point. It can have damaging consequences when used unethically or maliciously. This is where an AI voice generator like Speaktor sets itself apart from AI-powered voice cloning tools.

Speaktor is an AI-powered text-to-speech tool that uses speech synthesis to convert written content into high-quality speech. Speaktor uses voice data ethically, without impersonating any living person. It lets both content creators and marketers produce AI-generated voices.

Secure and Realistic Non-Clone Voices

Speaktor offers natural-sounding AI voices without relying on voice cloning to produce high-quality output. This eliminates the risks of voice cloning and the ethical and privacy concerns that come with it.

Speaktor applies text-to-speech technology to a range of use cases while maintaining privacy and security.

You can use Speaktor to create voiceovers and presentations or to improve digital accessibility across your marketing channels.

What Are the Ethical Concerns of Voice Cloning?

Voice cloning technology brings with it several ethical and privacy-related issues that are amplified in the media.

The Problem With Personalized Voice Clones in the Media

Because the media enables mass dissemination, the misuse of voice cloning technology there can lead to identity theft. More importantly, it can lead to defamation and even erode public trust in individuals or organizations, especially if it is weaponized during times of unrest or elections.

Besides the issues of defamation and identity theft, some of the other critical areas of concern include:

- Consent and Ownership: The ethical use of voice cloning technology requires the permission of the individual whose voice is replicated. Such permission is often not obtained, yet it is crucial to respecting an individual's personal autonomy.

- Misuse: Voice cloning technology can be misused for social engineering, phishing scams, spreading misinformation, running disinformation campaigns, manipulating elections and voting patterns, and much more.

- Preserving Individual Privacy: Using an individual's voice without consent can violate their privacy. Preventing the unauthorized use of a person's voice is therefore paramount.

How Can You Protect Yourself from Voice Cloning Fraud?

Individuals must take certain steps to protect themselves from voice cloning fraud. This is especially true given the risks and difficulty of taking legal action, as mentioned above.

Practical Tips to Avoid Falling for Cloned Voice Scams

To reduce the chances of falling prey to a voice cloning scam, there are a few practical tips you can follow. Some of these include:

- Use multi-factor authentication for sensitive or financial accounts to minimize the risk of malicious individuals accessing your personal and financial data.

- Do not engage with callers who seem suspicious. Stay calm if someone tries to use panic or urgency to pressure you into sending money.

- To avoid being scammed, verify the caller's identity through a separate, trusted channel, such as calling them back on a number you already know.

- Avoid uploading your voice to unknown or unreliable voice cloning tools, since recordings of your voice can be used to perpetrate scams or fraud.

- Choose secure services like Speaktor that do not require a recording of your voice and generate customized voices purely with AI. You can also consider a reliable text-to-speech Chrome extension.

Conclusion

Despite its benefits, AI-powered voice cloning always carries the risk of being misused.

Further, the rise of deepfakes and cybercrime makes it crucial for users to rely on safer alternatives that use advanced AI without infringing on a person's privacy or impersonating them without their consent.

Speaktor offers non-cloned, high-quality TTS voices for audio files. You can use these voices to generate speech for all your content creation and marketing needs. It is an ethical and reliable alternative to traditional voice cloning technologies.

Dubai, UAE
