In the rapidly evolving realm of content creation, AI speech synthesis tools for natural voice generation have opened new possibilities for how audiences consume audio. This shift offers more than just convenience—today’s AI text to speech software not only transforms written content into lifelike voices but also provides creators with adaptability, emotion, and linguistic accuracy.

From brand storytelling to interactive e-learning, the impact of voice generation technologies continues to expand. Deciding on the perfect voice synthesis technology becomes essential for anyone seeking engaging, high-quality audio output that captures attention in business, education, or creative projects.

Understanding AI Speech Synthesis Technology

The landscape of voice synthesis has evolved dramatically with the advent of artificial intelligence and natural language processing. Before diving into specific tools, it's essential to understand the technology that powers these solutions.

How AI Voice Generation Works

Modern AI voice synthesis combines deep learning algorithms with natural language processing to create human-like voices. Unlike traditional text to speech converter systems that relied on pre-recorded phonemes, today's AI-powered solutions analyze vast amounts of voice data to generate natural-sounding speech patterns, including proper intonation, emphasis, and emotional nuance.

Key Features of Modern Speech Synthesis

Today's AI voice generator platforms offer sophisticated capabilities that make them invaluable for various applications. These features include real-time voice generation, emotion control, and multi-language support. The voice synthesis technology has advanced to the point where generated voices can maintain consistency across long passages while adapting to different contexts and tones.

Benefits of AI Voice Generation

AI voice synthesis offers numerous advantages for businesses and content creators:

- Cost-effective alternative to professional voice actors: Save thousands of dollars on voice talent while maintaining professional quality for your content.

- Consistent voice quality across multiple projects: Ensure your brand voice remains identical across all content pieces, regardless of length or frequency.

- Rapid content creation and iteration: Generate voice content in minutes rather than days, allowing for quick revisions and updates as needed.

- Scalable solutions for multiple languages: Expand your reach globally without the need to hire multiple voice actors for different languages.

- Accessibility improvements for digital content: Make your content accessible to visually impaired users and those who prefer audio consumption.

Essential Features in Speech Synthesis Tools

When evaluating AI text to speech software, several key features determine their effectiveness and usability.

Voice Quality and Naturalness

The most crucial aspect of any voice generation software is the quality and naturalness of the generated voice. Modern systems should produce speech that sounds human-like, with appropriate pacing, intonation, and emotional resonance. This includes handling complex linguistic elements like idioms and context-dependent pronunciation.

Language Support

Global reach requires comprehensive language support. The best voice cloning software offers multiple languages and regional accents, ensuring content can reach diverse audiences effectively. This includes not just translation capabilities but also cultural adaptation of speech patterns and intonations.

Customization Options

Flexibility in voice customization allows creators to match their brand voice or specific project needs. This includes:

- Voice style selection: Choose from a range of voice personalities to match your brand tone and target audience preferences.

- Pitch and speed adjustment: Fine-tune the voice output to create the perfect pace and tone for your specific content needs.

- Emphasis control: Highlight key messages by adjusting word emphasis and sentence stress patterns.

- Emotion manipulation: Add emotional depth to your content by selecting appropriate mood and tone settings.

- Custom pronunciation rules: Ensure proper pronunciation of industry-specific terms and brand names.

File Format Compatibility

Professional voice generation AI tools should support various input and output formats, making them versatile for different use cases and workflows.

Top AI Speech Synthesis Tools for 2025

Let's examine the leading AI voice over generator solutions in the market, comparing their features, capabilities, and use cases.

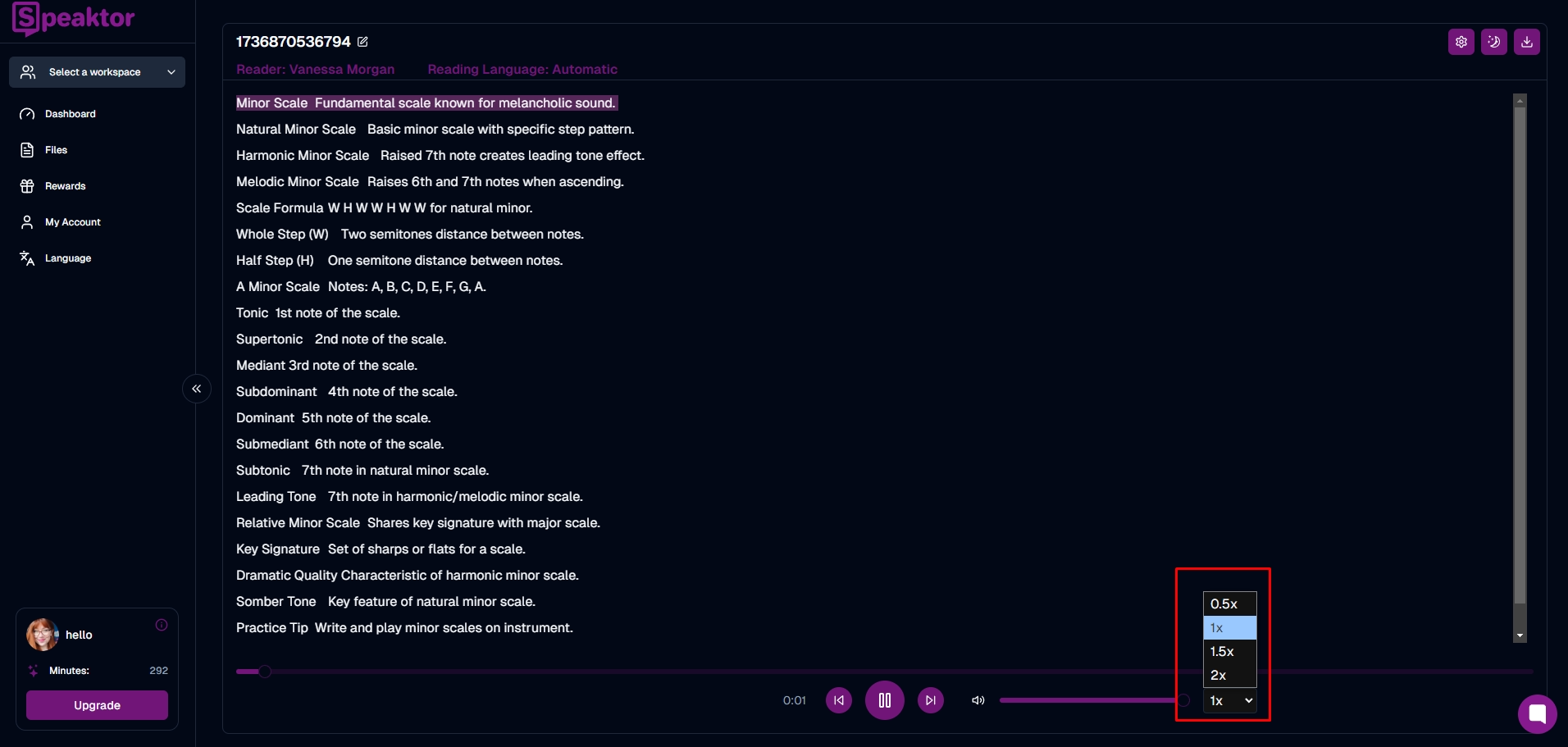

1. Speaktor

Speaktor is designed to serve individuals, professionals, and large enterprises seeking a comprehensive speech synthesis solution. It leverages advanced AI voice assistant capabilities to generate clear, dynamic audio suited for everything from basic narrations to full-scale corporate media. With sophisticated features, Speaktor stands out for its adaptable approach to natural voice generation.

- Over 50 languages: Supports a wide range of accents and dialects, accommodating diverse audience needs.

- Secure workspace organization: Ensures team collaboration and file management under strict data protection standards.

- Multiple output formats: Includes MP3 and WAV options to fit varied distribution channels.

- Professional voiceover creation: Offers multiple speaker choices and adjustable voice parameters for high-quality narrations.

2. Amazon Polly

Amazon Polly taps into AWS infrastructure for powerful and scalable AI voice generation. Its neural text-to-speech engine produces believable speech patterns that adapt to different contexts, an advantage for businesses expanding their content library.

While SSML support grants detailed voice control, a technical background may be necessary to fully utilize Polly’s features. Its pay-as-you-go model suits organizations that anticipate fluctuating demands, allowing them to expand usage without incurring heavy upfront costs.

3. Google Cloud Text-to-Speech

Google’s platform centers on WaveNet-based technology, delivering smooth and natural-sounding voices across numerous languages and accents. It meshes seamlessly with the broader Google Cloud ecosystem, making it a strong choice for those already invested in Google’s suite of tools.

Nevertheless, the service’s developer-oriented design can present challenges to newcomers without a technical background. Anyone seeking advanced customizations or large-scale deployment will find the deep integration possibilities advantageous, but typically at the cost of a steeper learning curve.

4. Microsoft Azure Speech

Microsoft Azure Speech Services combines neural TTS with enterprise-grade cloud security. The ability to train custom voices sets it apart, enabling brands to maintain consistent vocal identities across marketing, support, and educational materials.

Enterprises already aligned with the Microsoft ecosystem often benefit from easy product integration, enhanced by real-time synthesis for chatbots or live applications. Despite its robust features, smaller organizations with minimal Microsoft infrastructure might find setup comparatively complex.

5. Murf AI

Murf AI focuses on straightforward voice generation for creative teams and freelancers. The platform’s clean interface and integrated editor allow users to produce and fine-tune audio content without switching between multiple tools.

Its standout offering is voice cloning, which replicates existing vocal traits for commercial use. Although it may lack the deeper enterprise integration seen in larger platforms, Murf’s user-friendly design and quick-start templates make it popular for fast-paced production environments.

Choosing the Right Speech Synthesis Tool

Selecting the most suitable voice generation AI tool requires a clear understanding of your content objectives, technical environment, and budget constraints. Evaluating factors like language coverage and integration demands ensures your chosen platform meets both immediate needs and future growth. Below are the core considerations and use-case scenarios that guide a well-informed decision.

Step 1: Clarify Your Voice Quality Needs

Defining the level of realism or expressiveness required helps narrow your list of AI text to speech solutions. Simple announcements might only need basic clarity, whereas emotionally driven marketing campaigns demand highly natural voices with nuanced intonation.

- Consider whether you need expressive features like tone adjustments or emotional inflections

- Decide if specialized speech (e.g., corporate, casual) or a brand-specific style is mandatory

- Note any existing brand guidelines that define the tone or persona for voice output

Step 2: Evaluate Multi-Language Capabilities

Multiple languages or dialects may be a priority if you serve international or diverse audiences. Tools offering cultural adaptation—beyond basic translation—can produce more authentic results.

- Check if each language includes localized accents and speech patterns

- Verify that file exporting or usage rights apply to all supported languages

- Look into advanced features (like idiomatic expressions) for nuanced audience targeting

Step 3: Assess Team’s Technical Skill Level

Choose a solution that aligns with your staff’s expertise. Some platforms present user-friendly dashboards, while others rely on APIs or scripting, appealing more to technically inclined teams.

- Confirm whether developers are available to integrate advanced APIs

- Opt for “no-code” solutions if you lack a technical background

- Factor in potential training or onboarding time to fully utilize the tool

Step 4: Ensure Smooth Workflow Integration

A speech synthesis tool should complement existing processes rather than disrupt them. Look for robust compatibility with content management systems, design tools, or project software.

- Determine if bulk processing or batch uploads fit your production cycle

- Check for built-in plugins or add-ons supporting your current software stack

- Confirm how well the solution handles scheduling or automated generation

Step 5: Consider Budget Constraints and Scalability

Balancing costs and potential expansion helps avoid over- or under-spending. Compare pay-per-character models, monthly subscriptions, and annual plans to see which structure aligns with your output volumes.

- Look into possible hidden costs, like API calls or custom voice training

- Enquire about discounts or tier upgrades for scaling usage

- Plan for spikes in demand or seasonal content surges

Step 6: Match the Tool to Your Use Cases

Different voice synthesis solutions cater to varied scenarios, whether enterprise, educational, or marketing-focused. Pinpoint the features that directly address your primary objective.

- Check if the tool supports brand voice consistency for promotional materials

- Ensure voice clarity if content is primarily educational

- Evaluate emotional range and authenticity for creative storytelling purposes

Implementing Speech Synthesis in Your Workflow

To maximize the benefits of AI text to speech software:

- Start with clear voice guidelines: Create a comprehensive voice style guide that defines tone, pace, and pronunciation standards for consistency.

- Establish quality control processes: Implement regular checks and balances to ensure all generated content meets your quality standards.

- Create consistent workflows: Develop standardized procedures for content creation, review, and deployment across teams.

- Plan for scalability: Design your implementation to handle increased volume and additional language requirements as your needs grow.

- Monitor usage and performance: Track key metrics like generation time, quality consistency, and user feedback to optimize your voice content strategy.

Common Pitfalls to Avoid while Implementing Speech Synthesis

Watch out for these common challenges:

- Overlooking pronunciation customization: Ensure proper pronunciation of industry-specific terms by setting up custom dictionaries and pronunciation rules.

- Ignoring file format requirements: Verify compatibility with your target platforms and establish clear guidelines for file formats and quality settings.

- Underestimating processing time: Account for processing time in your content creation timeline, especially for batch processing and long-form content.

- Neglecting backup solutions: Implement robust backup systems and contingency plans for critical voice content generation needs.

- Insufficient testing across platforms: Conduct thorough testing across all target devices and platforms to ensure consistent quality and performance.

Conclusion

AI speech synthesis tools have revolutionized voice content creation, offering unprecedented quality and efficiency. While each platform has its strengths, Speaktor emerges as a comprehensive speech recognition technology solution that balances advanced features with user-friendly operation. Its combination of natural voice quality, extensive language support, and robust workspace organization makes it an excellent choice for businesses seeking professional voice synthesis capabilities.

Take the next step in your voice content creation journey by exploring what Speaktor can do for your specific needs. With its enterprise-grade features and intuitive interface, you can start producing high-quality voice content that engages your audience effectively.